Tim Weninger is associate professor of computer science and engineering at the University of Notre Dame. The opinions expressed in this commentary are his own.

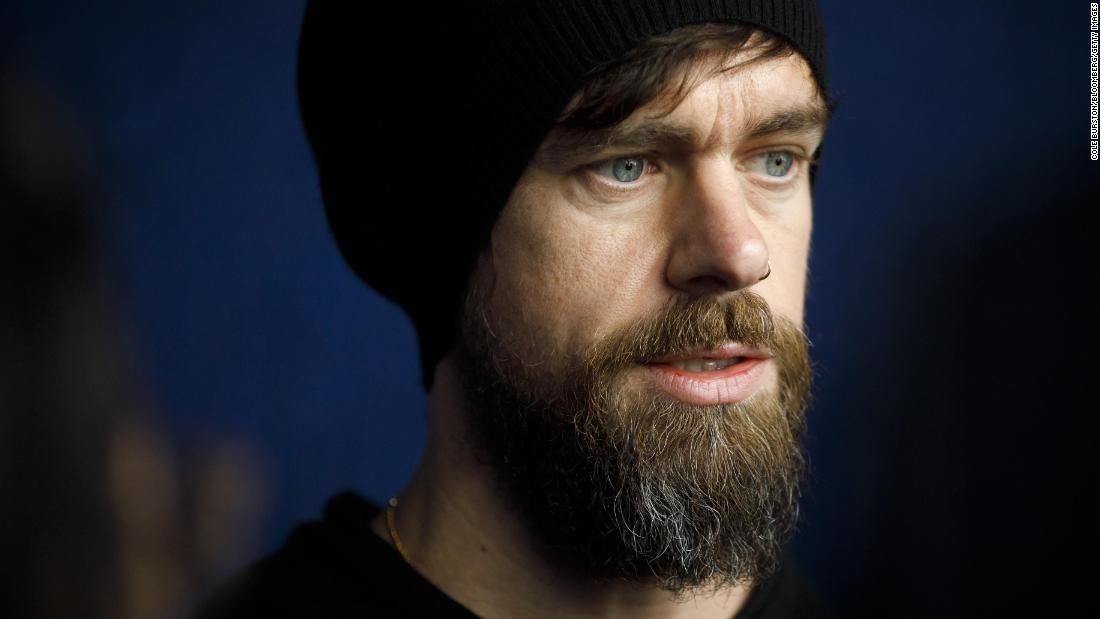

Last month, Twitter CEO Jack Dorsey announced that the social network will no longer permit political ads on the site. Dorsey said that a political message should be earned, not bought, and acknowledged the challenges that online political ads pose when it comes to misleading information, deepfakes and targeted messaging. On Friday, Twitter released additional details on the policy, saying it would still allow certain groups to advertise on political issues.

But removing politicians’ ads is just a small step toward fighting the broader problem of disinformation.

Do political campaigns spend money to spin news stories about their opponents? Of course they do.

And the ability of a campaign to hyper-optimize false narratives and micro-target them at specific groups of social media users erodes our ability to make informed decisions and weakens our democracy. Other social media platforms, like Facebook (which has been criticized for not fact-checking political ads posted on its site) and Instagram, should follow Twitter's lead. Social media companies should either fact-check the ads they choose to run or decline to run them altogether. Doing nothing is reckless and further endangers social discourse.

But paid posts are a minuscule portion of the political content shared on social media. The vast majority of political content that circulates on these platforms consists of unpaid posts. Most troubling, many of these unpaid posts originate from malicious groups posing as legitimate activists or news agencies, and these fake organizations wield a lot of influence on these platforms.

For instance, Russia's Internet Research Agency (IRA), the organization widely blamed for spreading disinformation during the 2016 presidential election, spent only $100,000 to purchase nearly 3,400 Facebook and Instagram ads in the months leading up to the election. Compare those 3,400 ads with the "61,500 Facebook posts, 116,000 Instagram posts, and 10.4 million tweets" that the IRA created and posted while pretending to be regular users, according to the Senate Select Committee on Intelligence. The committee argues that "an emphasis on the relatively small number of advertisements, and the cost of those advertisements, has detracted focus from the more prevalent use of original, free content via multiple social media platforms."

Even more alarming: Many prominent Americans, including Trump campaign officials and critics, promoted this IRA-created content, which made it look that much more legitimate. And there is thus far no evidence that these Americans knew the accounts were tied to Russia.

The overwhelming presence of unpaid, politically charged memes, videos and clickbait stories dwarfs the impact of sponsored political ads. Simply put, regular users can easily spread disinformation without even realizing it.

The scientific research on this point is becoming clear. My own lab's study of social media users found that 73% of all likes and shares contributed by real people occurred without the user ever reading the article: They never even clicked on the link. Social media users are mostly headline browsers: We scroll through our newsfeeds, find something that amuses or angers us and, without considering the content or the consequences, we spread it. So it's no wonder that political disinformation spreads so quickly. We're often the ones doing the sharing.

So what should be done? Dozens of ideas are being promoted as solutions to the problem of online disinformation. California's new bot disclosure law, for example, makes it illegal to use a bot to mislead someone online about its artificial identity, and several countries have recently passed laws to prohibit the creation of disinformation. But research shows that the role of bots in spreading disinformation is minor compared with the everyday sharing done by regular users. And giving governments the power to decide fact from fiction, and what can and cannot be published, carries significant risks to free speech and the free press.

Fact-checking is another common refrain. But fact-checking takes time. A fake story can be created, go viral and be forgotten before a fact-checker even has a chance to consider it. Even if there were an instantaneous, super-AI fact-checking system, what would we do with it? There is some evidence that tagging fake posts has a small effect in limiting how often they are shared, but in most practical cases fact-checking does too little, too late.

Social media companies rely almost entirely on users for their content. And it's clear that these platforms are doing a lousy job of asking their users for quality content.

If social media companies are serious about stopping the spread of disinformation, then they should encourage us, their users, to be more thoughtful about the content we post and share. There are countless ways to do this. Most of us aren't willing participants in malicious disinformation campaigns. So, in addition to ongoing efforts to demonetize websites that promote disinformation and to take down hateful content, social media platforms should give users better tools for making sound sharing decisions.

For example, many social platforms allow users to report posts that they believe are intentionally spreading disinformation. It's unclear how effective or widely used these systems are, but according to The Washington Post, Facebook keeps a trustworthiness score for every user in order to gauge the credibility of that user's reports.

Keeping this score secret rightly protects it from abuse, but surely Facebook could tell us a little about how we're doing. Why not notify me if an article I shared yesterday was fact-checked and found to be false or misleading? Such a feature would challenge me to think twice before sharing salacious or misleading content next time.

Despite their best efforts, social media companies so far appear to have invented and discovered dozens of ways not to fix the problem. There is no clear solution here. But any kind of progress will have to include a broad recognition that it is the users, individually and collectively, who need to fix the newsfeed.

Updated: This article has been updated to reflect new details that Twitter released about its political ad policy.